Qwen3: The New King of Open-Source Models

In the early hours of April 29, the highly anticipated Qwen3 model series was officially unveiled, drawing widespread attention from the global AI community.

The Qwen3 models continue to be open-sourced under the permissive Apache 2.0 license, allowing global developers, research institutions, and enterprises to freely download and use the models commercially via platforms such as HuggingFace, ModelScope, and more. Users can also access Qwen3's API services through Alibaba Cloud’s Bailian platform.

HuggingFace link: https://huggingface.co/collections/Qwen/qwen3-67dd247413f0e2e4f653967f

ModelScope link: https://modelscope.cn/collections/Qwen3-9743180bdc6b48

GitHub repository: https://github.com/QwenLM/Qwen3

Demo access: https://chat.qwen.ai/

Specifically, the Qwen3 series includes two MoE (Mixture of Experts) models and six dense models, each available in multiple variants such as base versions and quantized versions.

The MoE (Mixture of Experts) models include Qwen3-235B-A22B and Qwen3-30B-A3B, where 235B and 30B refer to the total number of parameters, and 22B and 3B refer to the number of active parameters.
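As a quick illustration of this naming scheme, the following minimal Python sketch parses the two MoE model names and computes what share of the parameters is active. The helper names `parse_moe_name` and `active_fraction` are made up for this example and are not part of any Qwen tooling.

```python
import re
from typing import Tuple


def parse_moe_name(name: str) -> Tuple[float, float]:
    """Return (total_billion, active_billion) from a name like 'Qwen3-235B-A22B'."""
    match = re.fullmatch(r"Qwen3-(\d+(?:\.\d+)?)B-A(\d+(?:\.\d+)?)B", name)
    if match is None:
        raise ValueError(f"not a Qwen3 MoE model name: {name}")
    return float(match.group(1)), float(match.group(2))


def active_fraction(name: str) -> float:
    """Share of the total parameters that is active, according to the name."""
    total, active = parse_moe_name(name)
    return active / total


for model in ("Qwen3-235B-A22B", "Qwen3-30B-A3B"):
    total, active = parse_moe_name(model)
    print(f"{model}: {active:g}B of {total:g}B parameters active "
          f"({active_fraction(model):.1%})")
```

By these nominal figures, roughly 9% of the flagship's parameters and 10% of the smaller model's parameters are active.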

The dense models include Qwen3-32B, Qwen3-14B, Qwen3-8B, Qwen3-4B, Qwen3-1.7B, and Qwen3-0.6B.

The following table presents the detailed parameters of these models:

Currently, the three larger models from the Qwen3 series have also been made available on the Qwen Chat web platform and mobile app.

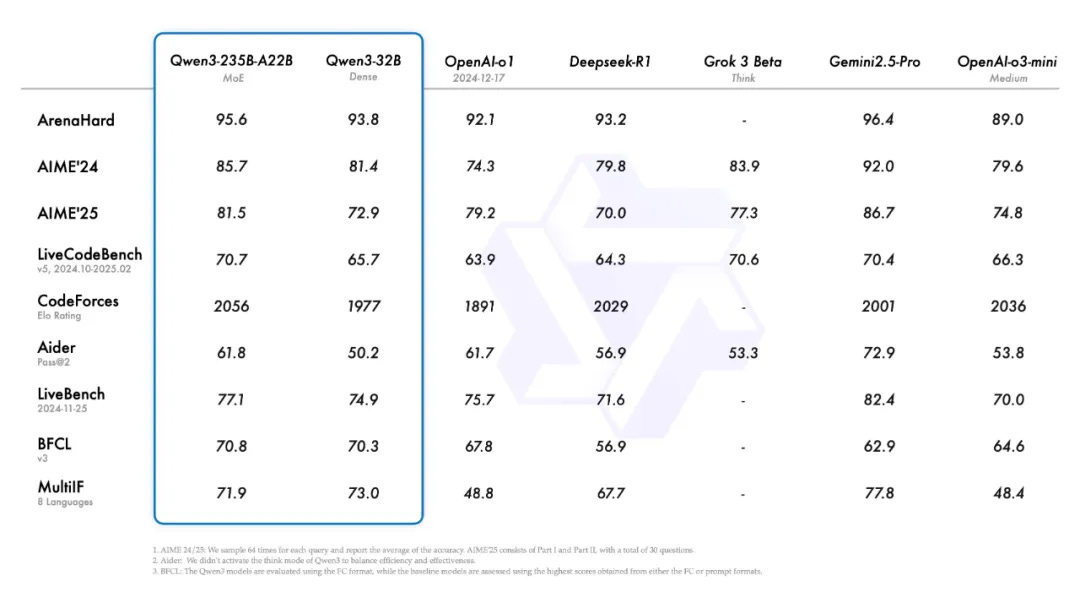

In terms of performance, the flagship model Qwen3-235B-A22B performs on par with other top-tier models such as DeepSeek-R1, o1, o3-mini, Grok-3, and Gemini-2.5-Pro across benchmarks in coding, mathematics, and general capabilities.

In addition, the smaller MoE model Qwen3-30B-A3B has only about 10% as many active parameters as QwQ-32B, yet delivers even better performance. Even a small model like Qwen3-4B can rival the performance of Qwen2.5-72B-Instruct.

While significantly improving performance, Qwen3 also greatly reduces deployment costs: the full-size flagship model can be deployed on just four H20 GPUs, with memory usage about one-third that of other models with comparable performance.

The development team also provided some recommended setups in their blog:

“For deployment, we recommend frameworks like SGLang and vLLM; for local use, tools such as Ollama, LMStudio, MLX, llama.cpp, and KTransformers are highly recommended. These options ensure users can easily integrate Qwen3 into their workflows, whether for research, development, or production environments.”
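As a rough sketch of what such an integration could look like, the Python snippet below builds a chat request for a locally served model. It assumes a server such as vLLM is already running and exposing an OpenAI-compatible endpoint. The address `http://localhost:8000/v1`, the model ID `Qwen/Qwen3-30B-A3B`, and the helper `build_chat_request` are assumptions for illustration and do not come from the Qwen blog. The request is only constructed here, not sent.

```python
import json
import urllib.request

# Assumptions: a local OpenAI-compatible server at this address, serving this model ID.
BASE_URL = "http://localhost:8000/v1"
MODEL_ID = "Qwen/Qwen3-30B-A3B"


def build_chat_request(prompt: str, max_tokens: int = 512) -> urllib.request.Request:
    """Build (but do not send) a chat-completions request for the local server."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_chat_request("Give a one-sentence summary of Mixture of Experts.")
print(request.get_method(), request.full_url)

# Once a server is actually running, the request could be sent like this:
# with urllib.request.urlopen(request) as response:
#     reply = json.load(response)
#     print(reply["choices"][0]["message"]["content"])
```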

The team stated:

“The release and open-sourcing of Qwen3 will greatly accelerate research and development in large foundation models. Our goal is to empower researchers, developers, and organizations around the world to leverage these cutting-edge models to build innovative solutions.”

Qwen’s technical lead, Junyang Lin, also shared additional details about the development of Qwen3.

He noted that the team spent considerable time addressing less glamorous but critical challenges, such as how to scale reinforcement learning through stable training, how to balance data from different domains, and how to improve support for more languages.

He expressed his hope that users would enjoy the Qwen3 models and discover interesting insights through them.

He also mentioned that the team is moving into the next phase: training agents to enhance long-range reasoning while focusing more on real-world tasks.

Of course, the development team also plans to release the technical report and training recipes for the Qwen3 models in the future.

User Feedback and Hands-on Testing

Just like the previous generation of Qwen models, the release of Qwen3 has once again captured the attention of the global AI and open-source communities, and the response has been overwhelmingly positive.

So how does it actually perform?

Synced (机器之心) also conducted some simple hands-on tests.

Starting with a basic reasoning question, Qwen3-235B-A22B handled it with ease, just as expected.

Next, we tried a more complex programming task: writing a pixel-style Snake game.

There was an additional requirement — a honey badger would chase the snake controlled by the player, and if the snake was bitten, it would lose half of its length.

The game would end if the snake hit a wall, bit itself, or its length dropped below two.

Qwen3-235B-A22B took about three minutes to complete this task.

A quick playthrough showed that the game was basically playable, though there were some bugs—for example, the honey badger moved too fast.

However, considering that this was a one-shot result generated by Qwen3-235B-A22B with only a simple prompt, the performance was entirely acceptable.

With more refined prompt engineering and iterative optimization, even better results can surely be achieved.

We also conducted a simple test of Qwen3’s smallest model, Qwen3-0.6B, using Ollama.

Three Core Highlights

The Qwen3 models bring significant enhancements across multiple dimensions.

First, they support two distinct reasoning modes:

Reasoning Mode:

The model reasons step-by-step and produces a thoughtful final answer, especially suited for complex problems requiring deep analysis.

Non-Reasoning Mode:

The model provides rapid, near-instant responses, ideal for simpler tasks where speed is prioritized over depth.

This flexibility allows users to control the model's "degree of thinking" based on the task. Complex problems can be handled with extended reasoning steps, while simple questions can be answered quickly without unnecessary delay.
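When consuming output from the reasoning mode, an application usually wants to separate the step-by-step reasoning from the final answer. The minimal Python sketch below assumes the reasoning is wrapped in `<think>...</think>` tags, a convention taken from the Qwen3 model cards rather than from this article. The helper name `split_reasoning` and the sample strings are hypothetical.

```python
import re
from typing import Tuple

# Assumption: reasoning-mode output wraps its chain of thought in <think>...</think>.
THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(raw_output: str) -> Tuple[str, str]:
    """Return (reasoning, answer); reasoning is empty for non-reasoning output."""
    match = THINK_BLOCK.search(raw_output)
    if match is None:
        return "", raw_output.strip()
    reasoning = match.group(1).strip()
    # Everything outside the think block is treated as the final answer.
    answer = (raw_output[:match.start()] + raw_output[match.end():]).strip()
    return reasoning, answer


reasoning_sample = "<think>\n17 * 3 = 51, then add 4.\n</think>\n\nThe result is 55."
direct_sample = "The result is 55."

print(split_reasoning(reasoning_sample))
print(split_reasoning(direct_sample))
```

The same function handles both modes, so downstream code does not need to know which mode produced a given response.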

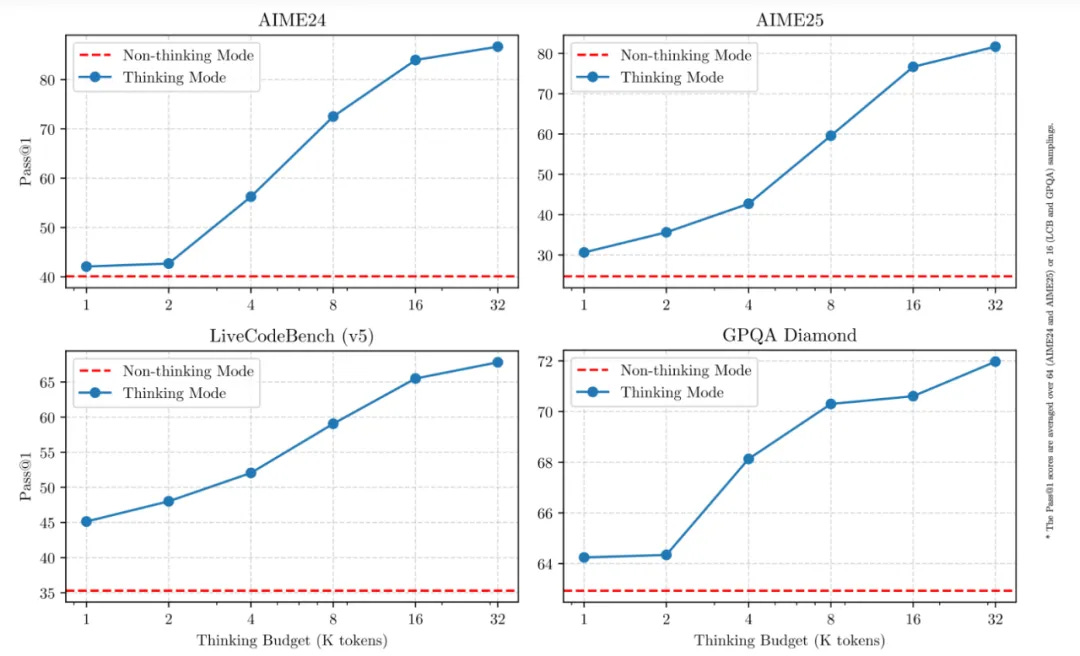

Importantly, combining these two modes greatly improves the model’s ability to stably and efficiently manage "reasoning budgets".

Qwen3 shows scalable, smooth performance improvements that correlate directly with the reasoning budget allocated to a task.

This design enables users to more easily allocate specific budgets for different tasks, achieving a better balance between cost efficiency and reasoning quality.
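One way to picture per-task budgeting is a small lookup table that decides whether to use the reasoning mode and how many reasoning tokens to allow. The Python sketch below is purely illustrative: the task categories, the token limits, and the names `ReasoningPlan` and `plan_for` are invented for this example and do not reflect any setting published by the Qwen team.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReasoningPlan:
    use_reasoning: bool       # whether to run the model in reasoning mode
    max_thinking_tokens: int  # hypothetical cap on tokens spent reasoning


# Hypothetical budgets, chosen only for illustration.
BUDGETS = {
    "chat": ReasoningPlan(use_reasoning=False, max_thinking_tokens=0),
    "coding": ReasoningPlan(use_reasoning=True, max_thinking_tokens=8192),
    "math": ReasoningPlan(use_reasoning=True, max_thinking_tokens=16384),
}

# Unknown task types fall back to a modest reasoning budget.
DEFAULT_PLAN = ReasoningPlan(use_reasoning=True, max_thinking_tokens=2048)


def plan_for(task_type: str) -> ReasoningPlan:
    """Look up the reasoning plan for a task type."""
    return BUDGETS.get(task_type, DEFAULT_PLAN)


for task in ("chat", "coding", "math", "translation"):
    print(task, plan_for(task))
```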

The following figure shows the trends in reasoning budget usage between the non-reasoning and reasoning modes across benchmarks such as AIME24, AIME25, LiveCodeBench (v5), and GPQA Diamond.

Second, Qwen3 models now support a broader range of languages.

Currently, Qwen3 models support 119 languages and dialects.

The enhanced multilingual capabilities open up new possibilities for international applications, enabling a wider range of global users to experience the full power of the models.

Third, agent capabilities have been enhanced.

Agent capabilities have become a major focus in the development of large models, especially since the recent introduction of MCP (Model Context Protocol), which has improved the adaptability and flexibility of agents and greatly expanded their application scenarios.

In this release, Qwen3 models have seen enhanced agent and coding abilities, including stronger support for MCP.

36 Trillion Tokens for Pretraining, Hybrid Reasoning Achieved in Post-training

In terms of pretraining, Qwen3’s dataset has been significantly expanded compared to Qwen2.5.

While Qwen2.5 was pretrained on 18 trillion tokens, Qwen3 was trained on almost double that amount, reaching approximately 36 trillion tokens across 119 languages and dialects.

To build such a massive dataset, the development team not only collected data from the web but also extracted information from PDF documents.

They used Qwen2.5-VL to extract text from these documents and Qwen2.5 to refine and improve the quality of the extracted content.

Additionally, to enrich the amount of mathematics and coding data, the team synthesized new data using two specialized expert models, Qwen2.5-Math and Qwen2.5-Coder.

This synthetic data included textbooks, Q&A pairs, code snippets, and more.

Specifically, the pretraining process was divided into three phases:

Phase 1 (S1):

The model was pretrained on over 30 trillion tokens with a context length of 4K tokens, building fundamental language skills and general knowledge.

Phase 2 (S2):

The dataset was enhanced by increasing the proportion of knowledge-intensive data (such as STEM, programming, and reasoning tasks), followed by pretraining on an additional 5 trillion tokens.

Final Phase:

High-quality, long-context data was used to extend the context length to 32K tokens, ensuring the model can effectively handle much longer inputs.

Thanks to improvements in model architecture, expanded training data, and more efficient training methods, the overall performance of Qwen3 Dense base models is comparable to larger Qwen2.5 base models.

For example, Qwen3-1.7B/4B/8B/14B/32B-Base perform similarly to Qwen2.5-3B/7B/14B/32B/72B-Base models.

Notably, in areas such as STEM, coding, and reasoning, Qwen3 Dense base models even outperform the larger Qwen2.5 models.
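To make the size pairing concrete, the short Python sketch below computes how large each Qwen3 base model is relative to the Qwen2.5 base model it is said to match. It uses only the nominal sizes in the model names, so the ratios are approximate. The names `SIZE_PAIRS` and `size_ratio` are made up for this example.

```python
# Pairings quoted in the text: (Qwen3 base size, Qwen2.5 base size), in billions.
SIZE_PAIRS = [(1.7, 3.0), (4.0, 7.0), (8.0, 14.0), (14.0, 32.0), (32.0, 72.0)]


def size_ratio(qwen3_billion: float, qwen25_billion: float) -> float:
    """Nominal parameter count of the Qwen3 model relative to its Qwen2.5 match."""
    return qwen3_billion / qwen25_billion


for qwen3_size, qwen25_size in SIZE_PAIRS:
    ratio = size_ratio(qwen3_size, qwen25_size)
    print(f"Qwen3-{qwen3_size:g}B-Base vs Qwen2.5-{qwen25_size:g}B-Base: "
          f"{ratio:.0%} of the parameters")
```

By these nominal figures, each Qwen3 base model has roughly 44% to 57% of the parameters of its Qwen2.5 counterpart.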

Moreover, Qwen3 MoE base models achieve comparable performance to Qwen2.5 Dense models while using only 10% of the active parameters, resulting in significant savings in both training and inference costs.

Meanwhile, Qwen3 has also undergone optimization during the post-training phase.

To develop a hybrid model capable of both deep reasoning and rapid response, the development team implemented a four-stage training process, which includes:

(1) Long chain-of-thought cold start,

(2) Long chain-of-thought reinforcement learning,

(3) Reasoning mode fusion, and

(4) General reinforcement learning.

In the first stage, the model was fine-tuned using diverse long chain-of-thought data, covering a wide range of tasks and domains such as mathematics, coding, logical reasoning, and STEM-related problems.

This process aimed to equip the model with fundamental reasoning capabilities.

The second stage focused on large-scale reinforcement learning, using rule-based rewards to enhance the model’s ability for exploration and deeper problem-solving.
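The article does not say which rules were used, so the following Python sketch is only one plausible shape for a rule-based reward: it checks whether the final answer of a math response matches a reference exactly. The `\boxed{...}` answer convention and the function names `extract_final_answer` and `rule_based_reward` are assumptions made for illustration, not the Qwen team's actual reward.

```python
import re
from typing import Optional

# Assumption: the final answer is marked with \boxed{...}, a common math convention.
BOXED = re.compile(r"\\boxed\{([^{}]*)\}")


def extract_final_answer(response: str) -> Optional[str]:
    """Return the content of the last \\boxed{...} in the response, if any."""
    matches = BOXED.findall(response)
    return matches[-1].strip() if matches else None


def rule_based_reward(response: str, reference: str) -> float:
    """Return 1.0 for an exact match with the reference answer, else 0.0."""
    answer = extract_final_answer(response)
    if answer is None:
        return 0.0  # no parseable final answer
    return 1.0 if answer == reference.strip() else 0.0


print(rule_based_reward(r"17 * 3 + 4 = 55, so the answer is \boxed{55}.", "55"))
print(rule_based_reward(r"I think it is \boxed{54}.", "55"))
print(rule_based_reward("The answer is probably 55.", "55"))
```

Because the reward is computed by a fixed rule instead of a learned model, it is cheap to evaluate and hard for the policy to game, which is one common motivation for this kind of reward.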

In the third stage, the model was fine-tuned on a combined dataset containing both long chain-of-thought data and commonly used instruction tuning data.

This step integrated the non-reasoning mode into the reasoning model, ensuring a seamless combination of deep reasoning and rapid response capabilities.

In the fourth stage, reinforcement learning was applied across more than 20 general domains, including tasks related to instruction following, format adherence, and agent capabilities, further strengthening the model’s general abilities and correcting undesirable behaviors.

Qwen Becomes the World's Leading Open-Source Model

The release of Qwen3 marks another major milestone for Alibaba’s Tongyi Qianwen initiative.

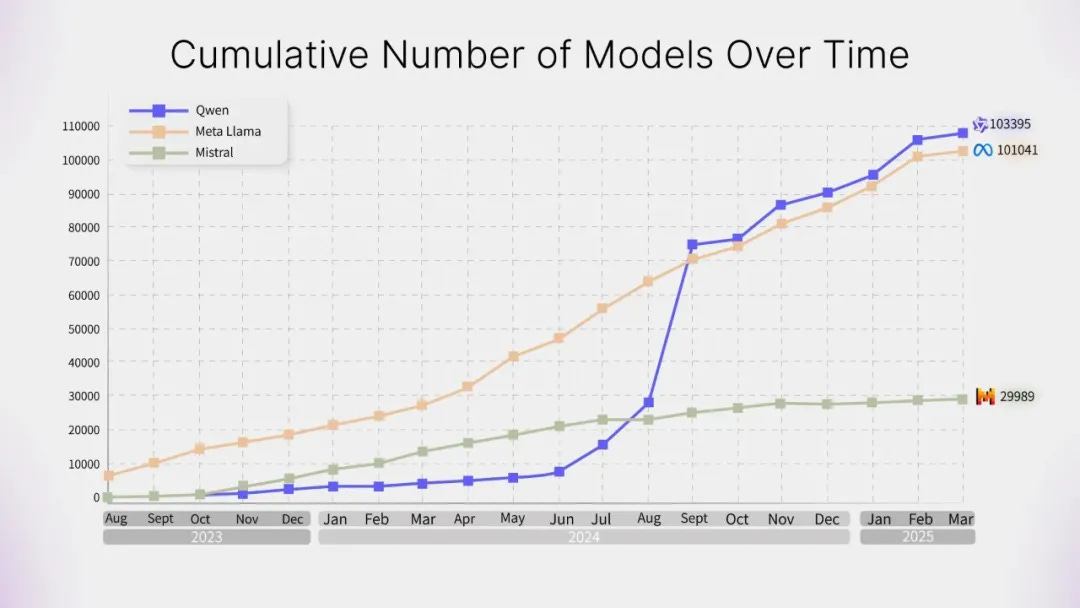

Set against the community feedback received by the Llama 4 model series, Qwen has now clearly emerged as the world’s leading open-source model family, and this conclusion is backed by data.

According to available information, Alibaba has open-sourced over 200 models, with global downloads exceeding 300 million. Qwen-derived models now number more than 100,000, overtaking Llama to make Qwen the largest open-source model family worldwide.

Source: https://mp.weixin.qq.com/s/gvWJMfy2ah4IHqyO-tP8MA