A Single Sentence From DeepSeek Sends Chinese Chip Stocks Soaring

On August 21, DeepSeek officially released its V3.1 model. A single additional comment posted by the company sparked a surge in China’s domestic computing power stocks.

After lying dormant for over two months, Hangzhou-based DeepSeek yesterday rolled out its next-generation model, DeepSeek-V3.1. In the official WeChat blog post, the company noted: “DeepSeek-V3.1 uses UE8M0 FP8 Scale for parameter precision.”

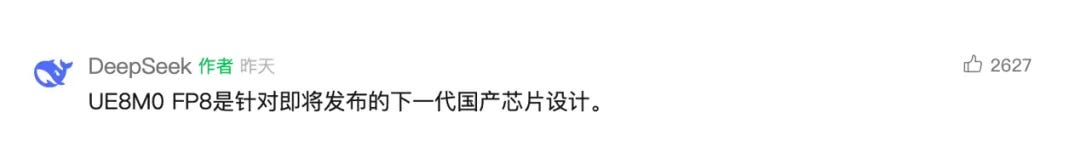

Perhaps sensing that this description of computational support was too cryptic, the company itself added in the comments: “UE8M0 FP8 is designed for the upcoming next-generation domestic chips.”

That one sentence was enough to set off a frenzy in the A-shares market. Overnight, more than 2,800 stocks rose. Computing power stocks across the board exploded, with multiple names hitting the daily limit-up. Chip stocks also surged: Cambricon hit its limit-up and set a new all-time high, SMIC jumped 14%, and Hygon Information also hit the limit-up.

Analysts suggested the market wasn’t simply chasing a slogan. Investors read DeepSeek’s words as a sign that China’s domestic chips may be closing in on the international frontier of computing power. For many, this was a rare moment when the whole of China’s AI hardware value chain moved together.

The attention on UE8M0 FP8 comes from its potential to ease long-standing performance bottlenecks when domestic chips run large models. FP8 is an 8-bit floating-point format that sharply reduces memory bandwidth pressure in AI training and inference, since each value occupies half the space of FP16. Yet most Chinese chips only support FP16 natively, which means FP8 models like DeepSeek’s lose much of that advantage on such hardware.
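To make the bandwidth argument concrete, here is a rough back-of-the-envelope sketch. The 1-billion-parameter model and the block size of 32 values per shared scale are hypothetical figures chosen for illustration, not numbers from DeepSeek; only the bit widths of FP32, FP16, and FP8 are fixed facts.

```python
import math

# Storage width in bits for each numeric format.
BITS = {"FP32": 32, "FP16": 16, "FP8": 8}

def weight_bytes(num_params, fmt, block_size=0, scale_bits=8):
    """Bytes needed to store num_params values in the given format.

    If block_size > 0, one shared scale of scale_bits is added per block.
    The block size is a hypothetical value chosen for illustration.
    """
    total_bits = num_params * BITS[fmt]
    if block_size > 0:
        total_bits += math.ceil(num_params / block_size) * scale_bits
    return total_bits / 8

def saving(fmt, baseline):
    """Fractional reduction in bytes moved when switching from baseline to fmt."""
    return 1 - BITS[fmt] / BITS[baseline]

if __name__ == "__main__":
    n = 1_000_000_000  # hypothetical 1B-parameter model
    for fmt in BITS:
        print(fmt, weight_bytes(n, fmt) / 1e9, "GB")
    print("FP8 + 8-bit scale per 32 values:",
          weight_bytes(n, "FP8", block_size=32) / 1e9, "GB")
    print("FP8 vs FP16 saving:", saving("FP8", "FP16"))
    print("FP8 vs FP32 saving:", saving("FP8", "FP32"))
```

Under these assumptions, the per-block scale adds only a few percent on top of the raw 8-bit payload, so most of the saving over FP16 survives.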

UE8M0 changes this. It is the scale-factor format used in microscaling schemes: an unsigned 8-bit value made up of eight exponent bits and no mantissa, so every scale is a power of two. Applying such a scale amounts to adjusting an exponent rather than performing a full floating-point multiplication, and the shared scale extends the dynamic range of the FP8 values it accompanies, making training faster, more efficient, and less prone to precision loss. In practice, it is said to cut memory bandwidth overhead by 75%, a figure consistent with replacing 32-bit values with 8-bit ones, and a game-changer for domestic chips still catching up in high-bandwidth memory. Put simply, UE8M0 FP8 acts like a “fast-forward button and power-saver mode” for Chinese AI chips, covering past weaknesses and opening a path to next-gen competitiveness.
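The exponent-only idea can be sketched in a few lines. This is an illustration, not DeepSeek’s implementation: it assumes the convention used in the OCP microscaling specification (exponent bias 127, byte 0xFF reserved for NaN) and assumes the scaled values are destined for FP8 E4M3, whose largest finite value is 448. The function names are hypothetical.

```python
import math

E8M0_BIAS = 127      # assumed bias, following the OCP microscaling convention
E8M0_NAN = 0xFF      # assumed NaN encoding in that convention
FP8_E4M3_MAX = 448.0 # largest finite FP8 E4M3 value (assumed target format)

def encode_ue8m0(scale):
    """Round a positive scale up to a power of two; return its biased exponent byte."""
    if not (scale > 0.0) or math.isinf(scale):
        return E8M0_NAN
    exp = math.ceil(math.log2(scale))
    exp = max(-127, min(127, exp))  # clamp to the representable exponent range
    return exp + E8M0_BIAS

def decode_ue8m0(byte):
    """Recover the power-of-two scale from its exponent byte."""
    if byte == E8M0_NAN:
        return math.nan
    return 2.0 ** (byte - E8M0_BIAS)

def scale_block(values):
    """Pick one shared power-of-two scale so the whole block fits the FP8 range.

    Returns the scale byte and the scaled values (not yet rounded to FP8).
    """
    amax = max(abs(v) for v in values)
    byte = encode_ue8m0(amax / FP8_E4M3_MAX) if amax > 0 else E8M0_BIAS
    scale = decode_ue8m0(byte)
    return byte, [v / scale for v in values]

if __name__ == "__main__":
    byte, scaled = scale_block([896.0, -1.0, 0.5])
    print(byte, decode_ue8m0(byte), scaled)
```

Rounding the scale up rather than to nearest is a design choice in this sketch: it guarantees the largest value in the block never exceeds the FP8 range, at the cost of slightly coarser resolution.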

From an industry perspective, DeepSeek’s statement was not just a technical one, but a potential endorsement of the domestic ecosystem. For years, the tight dependence of China’s best-performing models on NVIDIA hardware was seen as a vulnerability. This release has been widely interpreted as a gradual “decoupling,” with DeepSeek openly backing domestic chip development.

The adoption of UE8M0 FP8 also means domestic players are now delivering end-to-end capabilities—floating-point formats, compiler optimization, and framework adaptation—showing real progress from years of co-development between software and hardware.

As for which “next-generation domestic chip” DeepSeek was hinting at, many thought of Huawei first. But according to Phoenix News, nearly all of China’s leading chipmakers are in talks with DeepSeek, and several already support FP8. Cambricon, whose shares surged the most, offers FP8 support in its Siyuan 590 and 690 series. Moore Threads’ MUSA architecture also natively supports FP8 tensor acceleration and is well aligned with UE8M0 FP8 Scale; compared with FP16, this doubles FLOPs and improves bandwidth efficiency.

Since early this year, DeepSeek has been pressing the accelerator on China’s AI chip industry, whether by design or by circumstance. In the long run, with the U.S. AI Action Plan vowing to push the American AI tech stack abroad, China is racing to build its own. Accelerating the deep integration of domestic large models with homegrown chips will be a defining industry trend for quite some time.

Yes, this is not unusual and aligns with broader industry trends. DeepSeek was not trying to hint at any specific progress in domestic semiconductor manufacturing, only to show that it is aligned with what is already going on in the industry....