A Conversation between China's Big Four in Foundation Models

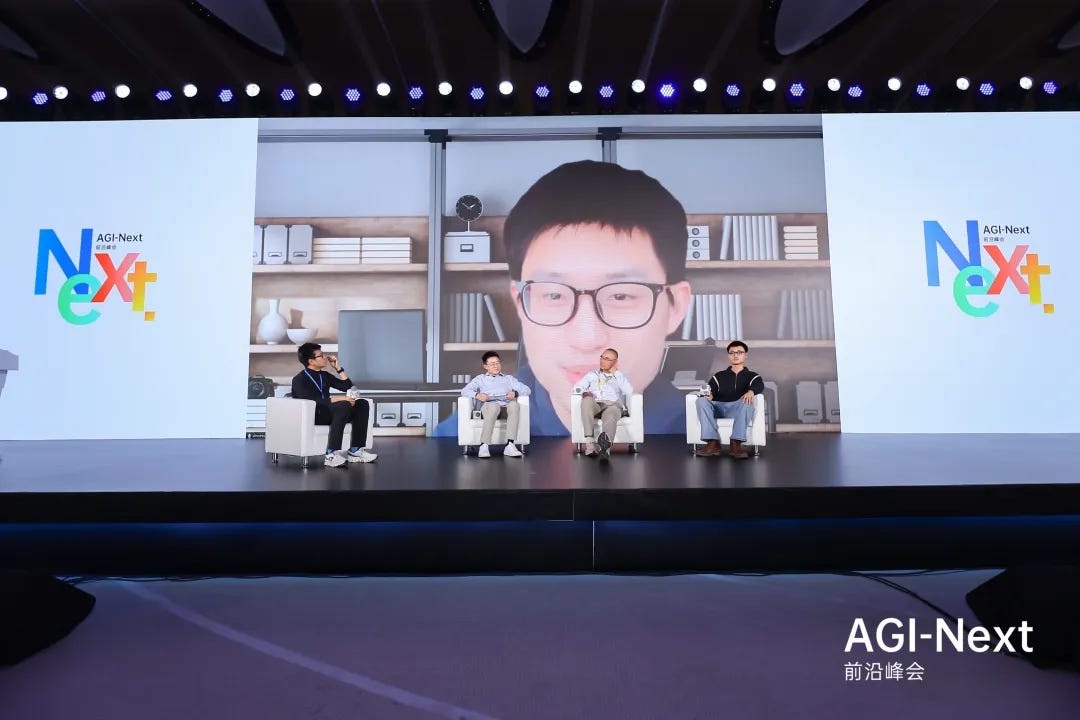

On January 10, 2026, at the AGI-Next Frontier Summit, several key players representing distinct niches within China’s large-model ecosystem gathered around the same table, a rare occurrence.

The summit, initiated by the Beijing Key Laboratory for Foundation Models at Tsinghua University, aimed to explore emerging paradigms and key challenges in the global development of AGI.

Among them were Professor Tang Jie, who had just led Zhipu AI to a successful IPO on the Hong Kong Stock Exchange; Yao Shunyu, formerly of OpenAI and now making his first public appearance in his new role at Tencent; Lin Junyang, the technical lead behind Alibaba’s Qwen model; and Professor Yang Qiang, a foundational figure in federated learning known for his work bridging academia and industry.

At the summit, Tang Jie of Zhipu AI was the first to pour cold water on the industry's enthusiasm. Despite the loud momentum behind open-source models in China, he stated bluntly: “The gap between Chinese and U.S. large models may not have narrowed.” As the marginal returns of the Scaling Law begin to diminish, the brute-force approach of piling on computational power is losing its edge.

Tang proposed a new evaluation paradigm—“Intelligence Efficiency”—measuring how much intelligence gain can be achieved per unit of resource invested. He suggested this could become the decisive factor in the next phase of competition.
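As an editorial illustration only (Tang did not present a formula at the summit), the idea can be written as a ratio. Here $\Delta I$ stands for the gain on whatever capability measure one chooses, and $\Delta R$ for the extra resources spent to obtain it (compute, data, or cost). Both the symbols and the choice of measures are assumptions of this sketch:

$$
\text{IE} = \frac{\Delta I}{\Delta R}
$$

Under this reading, two approaches that reach the same $\Delta I$ are ranked by how little $\Delta R$ they need. Halving the resources for the same gain doubles IE, which matches the "same gains with less scaling" goal Tang describes later in the conversation.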

Will the world’s leading AI company be from China in the next three to five years? Opinions differ.

Yao Shunyu is very optimistic — he thinks once a technical path is validated, Chinese teams can catch up fast and even outperform in certain areas. He believes history could repeat itself, just like what happened with EVs and manufacturing.

That said, he also warns that to truly lead, China needs to break through some big hardware bottlenecks, especially around compute. If China can break through on compute and chips and combine that with its strengths in infrastructure and energy, it could have a real shot at leading the pack.

Lin Junyang is more cautious. He points out that China’s compute resources are already stretched thin, mostly used to deliver on current projects. Meanwhile, companies like OpenAI have plenty of GPUs for exploring future architectures. He calls this the gap between “rich-world innovation” and “resource-strapped innovation.” But he also notes that this constraint forces Chinese teams to optimize their algorithms and infrastructure in ways that wouldn’t happen if they had unlimited resources.

On the talent and culture side, several speakers agree that things are looking up.

Both Tang Jie and Lin Junyang highlight the next generation, those born in the 1990s and 2000s, as unusually bold and exploratory. That spirit of risk-taking hasn’t always been common in China’s tech ecosystem, which historically leaned toward safer bets. But that’s changing. With better education and deeper talent pools, these young builders may end up driving China’s real AI leap forward.

Still, talent alone isn’t enough to go from follower to frontrunner. Yao Shunyu points out three big challenges:

First, hardware — without solving the chip and lithography choke points, real independence is hard.

Second, commercialization: China’s B2B market is less mature than the U.S. market, and willingness to pay remains weak.

And third, culture: Chinese teams are great at executing efficiently, but China still lacks a deep bench of people willing to bet on totally new paradigms.

Tang Jie adds that the government needs to keep improving the business environment, easing pressure on startups, and leveling the playing field between big firms and small. That would free up more smart people to focus on real innovation. But he also reminds founders: don’t wait around for the “perfect environment” — it’ll never come. What matters is being willing to go for it, and sticking it out for the long run.

Below is the full transcript of the conversation:

Li Guangmi (Moderator):

In 2025, we saw explosive growth in China’s open-source models and in the coding space. Meanwhile, in Silicon Valley, a clear shift began to emerge: companies stopped chasing every direction and started focusing on verticals. For example, Sora is all in on video; others are specializing in coding or agents.

Shunyu, as someone who has worked across both the U.S. and China, how do you view this “divergence”? Especially between the consumer (To C) and enterprise (To B) paths, what are your key observations?

Yao Shunyu:

Great to be here—thanks for having me. On the idea of “divergence,” I’d say I have two main observations:

First, there’s a growing gap in how value is perceived between To C and To B. Second, we’re seeing a divergence in integration strategies: vertical integration vs. layered application models.

Let me start with the To C vs. To B difference. It’s huge.

In the consumer space, many people using ChatGPT feel that the experience hasn’t changed much compared with last year. The reality is, most regular users don’t need a model that can reason through things like Galois theory. To C products still mostly feel like smarter versions of search engines, and users often need to learn how to “prompt” the model in order to unlock its potential.

In the enterprise space, though—especially in coding—things are changing fast. It’s reshaping the entire computer industry. The way we interact with machines is shifting: people don’t write code anymore—they speak natural language and let the system handle it. In To B, intelligence directly translates to productivity.

And companies are willing to pay a premium for that intelligence. Imagine a well-paid engineer with 10 daily tasks: a top-tier model might get 9 right, while a weaker one only gets 5. Fixing those 5 wrong answers costs time and money. That’s why, in enterprise settings, the gap between the best and the rest will keep widening—and people will only pay for the best.

Now, on integration strategies:

For To C, vertical integration is still the dominant approach. Think ChatGPT or Doubao—the model and the product are tightly coupled and iterate together.

But for To B, we’re seeing a shift toward layering: separating the foundation model from the application layer. People used to believe vertical integration was the best approach, but we’re learning that enterprise scenarios need a super-strong foundation model—that’s not something most product teams can build. Meanwhile, the application layer has to build complex context environments around the model. In areas like coding agents, even as models get more powerful, it’s the tooling and context around them that unlock real productivity.

Li Guangmi (Moderator):

Shunyu, now that you’ve joined Tencent, how do you see this playing out in the Chinese market? What are your top focus areas, and any key themes to share?

Yao Shunyu:

Tencent has strong To C roots, so a big question for us is: how can AI deliver real value to users?

For To C, the key isn’t just model parameters; it’s context.

A lot of times, answering a user’s question well doesn’t come down to having a bigger model or better RLHF—it comes from having better external inputs.

For example, take the classic question: “What should I eat today?”

The core model’s answer might not change much from last year to this year. But if the model knows it’s cold today, which neighborhood I’m in, what my spouse likes to eat, or what we chatted about last week—that changes everything.

So imagine forwarding your WeChat chat history to a bot like Yuanbao: suddenly, the model has much richer context to work with, and the value to the user increases significantly.

In short, leveraging extra context, paired with a strong model, is key to unlocking better consumer AI experiences.

For To B, the advantage is in “native data.”

Building enterprise-grade productivity tools like coding agents in China is hard. But at a big company like Tencent, with tens of thousands of employees, we’re thinking: how do we serve ourselves first?

Startups often rely on externally labeled data or APIs. Our edge is that we already have rich, real-world use cases. We have massive internal demand and real product environments to test in.

And we also have real-world data capture: a startup might need to hire someone to create or label synthetic coding data, but we can tap into the actual development and debugging processes of our thousands of engineers. That’s way more valuable than synthetic data.

To sum it up: for To C, it’s about going deep on context. For To B, it’s about leveraging internal use cases and real-world data. That’s where we’re focused right now.

Li Guangmi:

Next, I’d like to turn to Junyang. Alibaba Cloud has long been strong in the To B (enterprise) space, and you’ve previously mentioned that multimodal capabilities might lean more toward To C (consumer). How do you see the future positioning, or “ecological niche,” of Qwen?

Lin Junyang:

Strictly speaking, I can’t comment directly on company strategy. But I do think a company’s DNA is shaped generation by generation. Now that Shunyu has joined Tencent, maybe Tencent will start showing some “Shunyu DNA,” too (laughs).

Whether it’s To B or To C, the core is always the same: solving real problems. So the fundamental question becomes: how do we make the human world better?

This “divergence” we’re seeing is a natural process. Even within consumer products, we’ll likely see further differentiation—some products will lean toward healthcare, others toward legal domains.

As for positioning, the reason I admire Anthropic isn’t just their strength in coding—it’s how deeply they engage with B-side (enterprise) clients.

In my conversations with several U.S. API companies, many were surprised at how massive the token consumption is in real coding use cases. That level of demand hasn’t yet materialized in China. Or take Anthropic’s recent move into finance—it came directly from conversations with clients, where they uncovered genuine opportunities.

So to me, the “divergence” of model companies is simply a natural response to market demand. I prefer to trust in the underlying logic of AGI development—let it evolve organically, as long as it truly serves human needs.

Whether it’s divergence or something else, I believe the path forward should stay grounded in our evolving understanding of AGI. We should focus on doing what AGI is meant to do.

Li Guangmi:

Thank you, Junyang. Professor Yang Qiang, what’s your take on this “divergence” discussion?

Yang Qiang:

I’m more focused on the divergence between industry and academia. Industry has been racing ahead, while academia stands back and watches. Now that large models are entering a steady state, academia needs to step up and tackle the deep scientific questions that industry doesn’t have time to solve.

Questions like: Given a certain amount of computational and energy resources, how far can intelligence really go? How should we balance resources between training and inference?

In some of my early experiments, I found that too much memory could actually introduce noise and interfere with reasoning. So where’s the balance?

Then there’s hallucination and Gödel’s incompleteness theorem: large models can’t prove their own correctness, and hallucinations may never be fully eliminated. It’s like risk and return in economics—there’s no such thing as a free lunch. These are challenges for both mathematicians and algorithm designers.

When agents are chained together, errors compound exponentially. I recommend people read Why We Sleep—it explains how the human brain clears noise through sleep to maintain function. Maybe large models need something like “sleep” too. That line of thought could inspire computational architectures beyond Transformers.
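Here is a back-of-the-envelope sketch of why chained agents compound errors. It rests on a simplifying assumption that Professor Yang did not state: each of $n$ steps succeeds independently with the same probability $p$.

$$
P(\text{chain succeeds}) = p^{n}, \qquad \text{e.g. } 0.95^{10} \approx 0.60, \quad 0.95^{20} \approx 0.36
$$

Even with 95%-reliable steps, a 20-step chain fails most of the time, and the success rate keeps shrinking geometrically as $n$ grows.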

Li Guangmi:

Professor Tang, Zhipu has been strong in both coding and long-horizon agent research. What’s your perspective on this divergence?

Tang Jie:

Looking back at 2023, we were one of the first teams in China to launch a chat-based system. Our instinct was simple: get it online fast. But by the time we actually launched around August or September, there were already a dozen big models on the market, and none of them attracted as many users as expected.

After reflecting on that experience, we realized the core issue: chat alone doesn’t actually solve real problems. At the time, we predicted that chat might replace search. In reality, many people now use models to assist with searching, but Google wasn’t replaced; instead, Google reinvented itself.

From that perspective, with new players like DeepSeek emerging, the era of the “chat wars” is over. We need to think hard about what the next strategic bet should be.

At the beginning of 2025, we had intense internal debates. In the end, we decided to bet on coding. We went all in—and it turns out, we made the right call.

Li Guangmi:

It seems everyone is playing to their strengths based on their unique resources and backgrounds.

Let’s move to our next topic: new paradigms. We’ve been in the pretraining era for three years now, and reinforcement learning has become widely accepted. The next wave everyone’s talking about is self-learning.

Shunyu, having worked at OpenAI, what do you think could be the third paradigm?

Yao Shunyu:

“Self-learning” is already widely accepted in Silicon Valley, but I’d offer two thoughts.

First, it’s not a single methodology; it’s about how tasks are defined based on data. Things like personalized chat, code generation that adapts to specific documentation, or scientific exploration like a PhD researcher: these are all different forms of self-learning.

Second, it’s already happening. ChatGPT is adapting to users’ styles. Claude can now write 95% of its own project code. Today’s AI systems are really made up of two parts: the model, and the codebase it uses. And AI is already starting to help write the code it needs to run and deploy itself.

It’s less of a sudden leap and more of a gradual evolution.

Li Guangmi:

Let me press you on that: what key conditions or bottlenecks still need to be overcome to realize true self-learning?

Yao Shunyu:

Honestly, we’re already seeing early signs in 2025. Take Cursor, for example: they’re training on live user data every few hours. It doesn’t feel earth-shattering yet because foundation models still have capability limits.

The real bottleneck is imagination. We understand what reinforcement learning can do; we’ve seen things like o1 solving math problems. But with self-learning, we haven’t even defined what the “target task” looks like.

Is it an AI trading system that consistently makes money? Or one that solves long-standing scientific mysteries?

Before we can verify it, we first need to imagine it.

Li Guangmi:

If a new paradigm emerges by 2027, which global company is most likely to lead it?

Yao Shunyu:

OpenAI still has the highest probability. Sure, commercialization has diluted some of its experimental DNA, but it’s still the most fertile ground for new paradigms to emerge.

Li Guangmi:

Junyang, what’s your view on the 2026 paradigm shift?

Lin Junyang:

Taking a more pragmatic view, I’d say the reinforcement learning (RL) paradigm is still in its early days. It hasn’t been fully scaled in terms of compute, and the global infrastructure bottleneck hasn’t been resolved yet.

As for the next paradigm, I agree the core will be self-learning.

I was chatting with a friend recently, and we both noted that simple human-AI interaction doesn’t necessarily make models smarter. In fact, longer context often makes models dumber.

That’s why we need to think about test-time scaling: can a model become smarter simply by outputting more tokens and thinking longer? OpenAI’s “o” series seems to suggest yes. Whether through coding or AI-scientist use cases, where models try to tackle problems humans haven’t solved yet, these are incredibly meaningful directions.

Another critical element is stronger agency. Today’s models need to be prompted by humans—what if in the future, the environment prompts the model, and it decides on its own what to do?

That introduces a risk that’s even bigger than content safety: behavioral safety.

I’m less worried about models saying the wrong thing, and more about them doing the wrong thing, like hypothetically throwing a bomb into a conference venue. It’s like raising a child: you have to equip them with abilities while also instilling the right values.

So yes, there are risks, but I believe self-learning with real agency is a critical next step.

Li Guangmi:

Junyang, between self-improvement and automated AI researchers, where do you think we’ll see the first breakthroughs in self-learning?

Lin Junyang:

Honestly, I think “automated AI researchers” might not even require that much self-learning. Training AIs may become a standardized, even replaceable process. What really matters is how deeply AI understands the user.

In the past, with recommender systems, the more input users gave, the simpler the algorithm needed to be to deliver good results. In the AI era, the challenge is using that input to make AI a tool that really knows you.

Recommender systems had clear metrics—click-through rates, purchase rates. But now AI is touching all aspects of daily life, and we lack a unified metric to judge how well it’s working. That’s a big technical challenge right now.

Li Guangmi:

Let’s talk about memory and personalization. Do you think we’ll see any major leaps in 2026?

Lin Junyang:

Personally, I think technological progress is linear, but human perception is exponential.

Take ChatGPT: its progress feels linear to us developers, but to the public it was mind-blowing. Right now, everyone is scrambling to improve memory, but all it’s doing is “remembering past events.” You still have to say your name every time. That’s not really intelligent.

True memory should feel like an old friend—someone who understands instantly, without you having to re-explain everything. I think we’re still about a year away from that threshold.

Every day, we look at our work and think it’s kind of clunky—there are bugs and inefficiencies. But it’s these small, incremental steps—like slightly better coding—that are creating huge productivity gains. There’s massive potential in how algorithms and infrastructure come together.

Li Guangmi:

Professor Yang, over to you.

Yang Qiang:

My work has long focused on federated learning, which is all about multi-center collaboration. Many real-world scenarios have limited resources and high privacy requirements. I think we’ll increasingly see collaboration between general-purpose foundation models and localized or domain-specific models.

Take the work Xuedong Huang is doing at Zoom: they’re building a big foundation and allowing others to plug into it. That’s a model that protects privacy while still leveraging the power of large models. I think this will become increasingly common in sectors like healthcare and finance.

Li Guangmi:

Professor Tang, final word to you.

Tang Jie:

Whether it’s continual learning, memory, or multimodality, any of these could spark the next paradigm shift.

Why now? In the past, industry was far ahead of academia. I remember returning to Tsinghua a couple of years ago and many professors had zero GPUs. Meanwhile, companies had tens of thousands. The gap was a thousandfold.

But by late 2025 and early 2026, that’s changed. Universities have caught up in compute. Professors in both Silicon Valley and China are diving into model architecture and continual learning. Industry no longer has total dominance. The compute gap may still be 10x, but academia’s “innovation gene” is now bearing fruit.

The deeper reason is efficiency bottlenecks. Innovation often erupts when we pour in resources but stop seeing gains.

Scaling still brings value: you can go from 10TB of data to 30TB or 100TB. But we have to ask: is it worth it? What’s the return on that 1 or 2 billion dollars? If the improvement is tiny, the math doesn’t work. The same goes for retraining the foundation model and rerunning the RL loop every time; it’s just not efficient.

What we need is a new paradigm to measure return: Intelligence Efficiency.

If your goal is to increase the upper limit of intelligence, brute-force scaling is the dumbest way to do it. The real challenge is: how do we achieve the same gains with less scaling?

That’s why I truly believe a paradigm shift is coming in 2026. And we’re working hard to make sure we’re the ones leading it.

Li Guangmi:

People have high expectations for agents. Some believe that by 2026, agents could handle one to two weeks’ worth of human work.

Shunyu, you’ve spent a lot of time researching agents. Realistically, do you think this is achievable? From a model company’s perspective, what’s your take?

Yao Shunyu:

There’s a clear logic split between To B and To C.

In the To B (productivity) space, things are simple: stronger models = more tasks completed = greater value. It’s an upward curve. Just focus on good pretraining and post-training; that’s it.

In To C, DAU (daily active users) is often not positively correlated with model intelligence—sometimes even negatively correlated.

Besides the model itself, there are two big bottlenecks.

First is deployment. From my experience in To B companies, even if the model doesn’t get any smarter, just deploying it better in real-world environments can bring 10x or even 100x returns. Right now, AI’s impact on GDP is still under 1%, so the room for growth is huge.

Second is education. It’s not about humans being replaced by AI—it’s about people who know how to use tools replacing those who don’t. Instead of obsessing over model parameters, it’s more meaningful—at this stage in China—to teach people how to use Claude, Kimi, Zhipu, and other tools effectively.

Li Guangmi:

Junyang, Tongyi Qianwen is also building an agent ecosystem and supporting general-purpose agents. Can you share some thoughts?

Lin Junyang:

This touches on product philosophy. Yes, tools like Manus have been very successful, but I personally believe in “model as product.”

I once had a conversation with folks from Thinking Machines Lab. They mentioned this great idea: “researcher is product.” Researchers should act like product managers: end-to-end builders solving real-world problems.

I think the next wave of agents will embody that. It’s closely tied to self-evolution and autonomous learning. Future agents shouldn’t just be interactive (Q&A style)—they should be delegable. You give them a fuzzy, general instruction, and they’ll make decisions and evolve independently over a long execution cycle.

That sets a high bar for model capabilities and test-time scaling.

Another critical issue is environmental interaction. Right now, most agents are stuck in digital environments—way too limited.

Some of my friends in AI for Science are working on drug discovery with AlphaFold. Running simulations on computers isn’t enough; they need robots doing real experiments to get feedback. Right now, that part is still heavily manual or outsourced. It’s inefficient.

Genuine long-term agent use cases only emerge when AI can interact with the physical world and form a closed feedback loop.

Tasks confined to screens may be solved this year, but over the next 3–5 years, the real excitement will come from the intersection of agents and embodied AI.

Li Guangmi:

Final tough question: are general-purpose agents a startup opportunity, or is it just a matter of time before model companies dominate?

Lin Junyang:

Just because I work on foundation models doesn’t mean I get to be a startup mentor for this.

I’ll borrow something Peak (Manus co-founder) said: the most interesting part of building general agents is solving long-tail problems. That’s also the most compelling aspect of AGI: helping people solve problems that have no clear solution elsewhere.

Popular problems (like top product recommendations) are easy. True AGI means solving those edge-case issues that no one else has figured out.

So, if you’re a great product wrapper—better than the model company itself—then you’ve got a shot. But if you lack that confidence, model companies will likely win out. When they hit a wall, they can just “burn GPUs” and train deeper models. That’s a low-level advantage—it’s hard to compete with. So really, it depends on your approach.

Li Guangmi:

But when it comes to long-tail problems, don’t model companies already have the compute and data to move quickly?

Lin Junyang:

Exactly, and that’s what makes reinforcement learning (RL) so exciting today. It’s now way easier to fix model issues than before.

For instance, in To B, clients used to struggle with fine-tuning. They had to worry about data balance, but their data quality was often terrible, just pure junk. It gave us headaches.

Now with RL, as long as there’s a query—even without perfect labeling—a bit of training can fix the issue. Previously, it took tons of cleaning and annotating. Today, RL does it faster, cheaper, and the results are easy to merge back into the model.

Li Guangmi:

Professor Yang, your thoughts?

Yang Qiang:

I think agent evolution comes in four stages. The key variable is: who controls the goal and the plan, humans or the AI?

Right now, we’re at stage one: humans define the goal and break it down into steps. To be blunt, most agents today are just glorified prompt languages.

But I predict a turning point: Foundation models will learn from observing how humans work, using real-world process data.

Eventually, agents will become fully endogenous systems—goals and plans will be defined by the models themselves. That’s when they become truly intelligent.

Li Guangmi:

Professor Tang, closing thoughts?

Tang Jie:

There are three key factors that will shape where agents go:

1) Value: How valuable is the problem the agent is solving? Early GPTs failed because they were too simplistic, just prompt wrappers. Agents must tackle real, complex problems to be useful.

2) Cost and boundaries: Here’s the paradox. If a problem can be solved with a quick API tweak, the foundation model will absorb it. So applications need to find their niche before the base model catches up.

3) Speed: This is all about timing. If we can go deeper into a use case and polish the experience ahead of the curve, even by six months, we can survive. Speed is the name of the game. If our code is solid, we might just outpace others in coding or agent development.

Li Guangmi:

Final question: looking ahead 3 to 5 years, what’s the probability that the world’s leading AI company could be Chinese? What do we need to go from “follower” to “leader”?

Yao Shunyu:

I’m very optimistic; it’s highly likely.

History shows that once a technical path is validated, Chinese teams can rapidly replicate and even outperform in specific areas. Look at EVs or manufacturing.

But to truly lead, we must overcome key hurdles, especially hardware bottlenecks—like lithography and compute. If we can break through compute limits, combined with China’s strengths in power supply and infrastructure, it would be a massive advantage.

On the business side, the U.S. To B market is more mature and customers are more willing to pay. Chinese companies either need a better domestic commercialization environment or the ability to compete globally.

Most importantly, it’s about talent and culture. China has a deep talent pool, but we still lack enough people willing to explore new paradigms. We’re amazing at optimizing within existing frameworks—doing more with fewer GPUs—but what’s missing is a spirit of defining new paradigms and taking big risks.

Li Guangmi:

Follow-up: in terms of research culture, how do Chinese labs compare with OpenAI or DeepMind? Any advice?

Yao Shunyu:

Research cultures vary greatly; even labs in the U.S. can be more different from each other than from labs in China.

Two big differences in China:

1) Preference for certainty. Once pretraining proves feasible, everyone jumps in and gets great at optimizing it. But when it comes to unproven areas like long-term memory or continual learning, there’s far less investment. We need more patience for exploring the unknown.

2) Obsession with benchmarks. A positive counter-example is DeepSeek—they’re not chasing leaderboard scores, but asking: “What’s the right thing to do?” Claude isn’t No.1 on most programming benchmarks, yet it’s widely regarded as the best experience. We need to step out of the benchmark mentality and focus on real user value.

Li Guangmi:

Thanks, Shunyu. Junyang, how likely do you think a Chinese company will lead globally in the next 3–5 years? What are the biggest challenges?

Lin Junyang:

Tough question, but I’ll approach it through probability and the U.S.–China gap.

U.S. compute is 1–2 orders of magnitude ahead. But more critically, OpenAI and others are putting massive compute into next-gen research. Here, we’re barely keeping up with daily delivery, which already exhausts most of our compute.

This creates the contrast between “rich-people innovation” and “poor-people innovation.”

Rich labs can waste GPUs. Poor labs, out of necessity, are forced to tightly optimize algorithms and infrastructure—things rich labs don’t always have the incentive to do. That might be a blessing in disguise.

It also reminds me of a missed opportunity. In 2021, colleagues from Alibaba’s chip team asked me: “Can you predict the model architecture three years out? Should we still bet on Transformers? Will it be multimodal?” Chip tape-outs take three years, so they needed clarity. But I didn’t dare commit. I said, “I don’t even know if I’ll still be at Alibaba in three years.” It was a classic case of talking past each other—and we missed our chance.

Looking back now? It’s still Transformers. Still multimodal. I regret not pushing harder.

So yes, we’re the “poor,” but that might be what drives the next generation of hardware-software innovation.

Another factor is people. Americans have a bold, risk-taking culture. Early electric cars leaked, had safety issues—yet billionaires still invested. In contrast, Chinese capital has historically favored safer bets.

But here’s the good news: our education system is improving.

The Gen Zers in our team are arguably more daring than I am. With their rise, and a better business environment, true innovation is possible, even if our odds aren’t huge.

If I had to assign a number? 20%. And I consider that optimistic, because the historical head start is real.

Li Guangmi:

Are you afraid of the widening compute gap?

Lin Junyang:

You can’t afford fear in this field—you need a strong heart.

We’re lucky just to be part of this wave.

My mindset is: focus on value, not rankings. As long as our models solve real-world problems and create societal value, I’m OK not being the best in the world.

Li Guangmi:

Professor Yang, what’s your take on this AI cycle?

Yang Qiang:

Let’s look at the history of the internet. It started in the U.S., but China quickly caught up and led in consumer applications; WeChat is a world-class example.

AI is similar: it’s a tool, and Chinese ingenuity will push it to the limit.

I’m especially optimistic about the To C space—China will see an explosion of innovation.

To B is lagging due to weak willingness to pay and conservative enterprise culture. But we can draw lessons from U.S. players like Palantir, who use an ontology + FDE (forward-deployed engineer) model to bridge the gap between general AI and real-world applications.

I believe China will develop a localized version of this—AI-native companies delivering real To B solutions.

Li Guangmi:

Professor Tang, your closing thoughts?

Tang Jie:

First, let’s acknowledge reality: there is a gap in foundational research between China and the U.S.

But the landscape is shifting.

China’s post-90s and post-2000s generations—this new wave of companies and talent—is leagues ahead of the past.

I sometimes joke that our generation of researchers is the “unluckiest” one: the old-school professors are still going strong, and the Gen Z geniuses are already rising. We’re caught in the middle, like we got skipped over entirely.

Jokes aside, China’s real AI opportunity lies in three areas:

1) People – Risk-takers. We have brilliant minds who are willing to take real risks. Look at the 90s/00s generation—like Junyang, Shunyu, the Kimi team—they all have incredible curiosity and drive. They’re betting on uncertain futures. That spirit is invaluable.

2) Environment – Enabling innovation. As Junyang said, compute is tied up in delivery. If the government and institutions can further improve the innovation climate—clarify the role of big firms vs. startups, reduce delivery burdens—these brilliant minds will have more space to focus on core breakthroughs.

3) Mindset – Grit. Don’t wait for a perfect environment—it will never exist. Instead, be grateful. We’re the lucky ones—witnessing the shift from scarcity to abundance. This experience is a form of wealth. If we can persist with a humble heart and keep pushing forward, we may just end up winning.

Li Guangmi:

And a final appeal from me: let’s give young researchers more compute and more patience. Let them grind away for 3–5 years.

With that time and trust, maybe one day, we’ll witness the rise of China’s very own Ilya Sutskever.
